Abstract

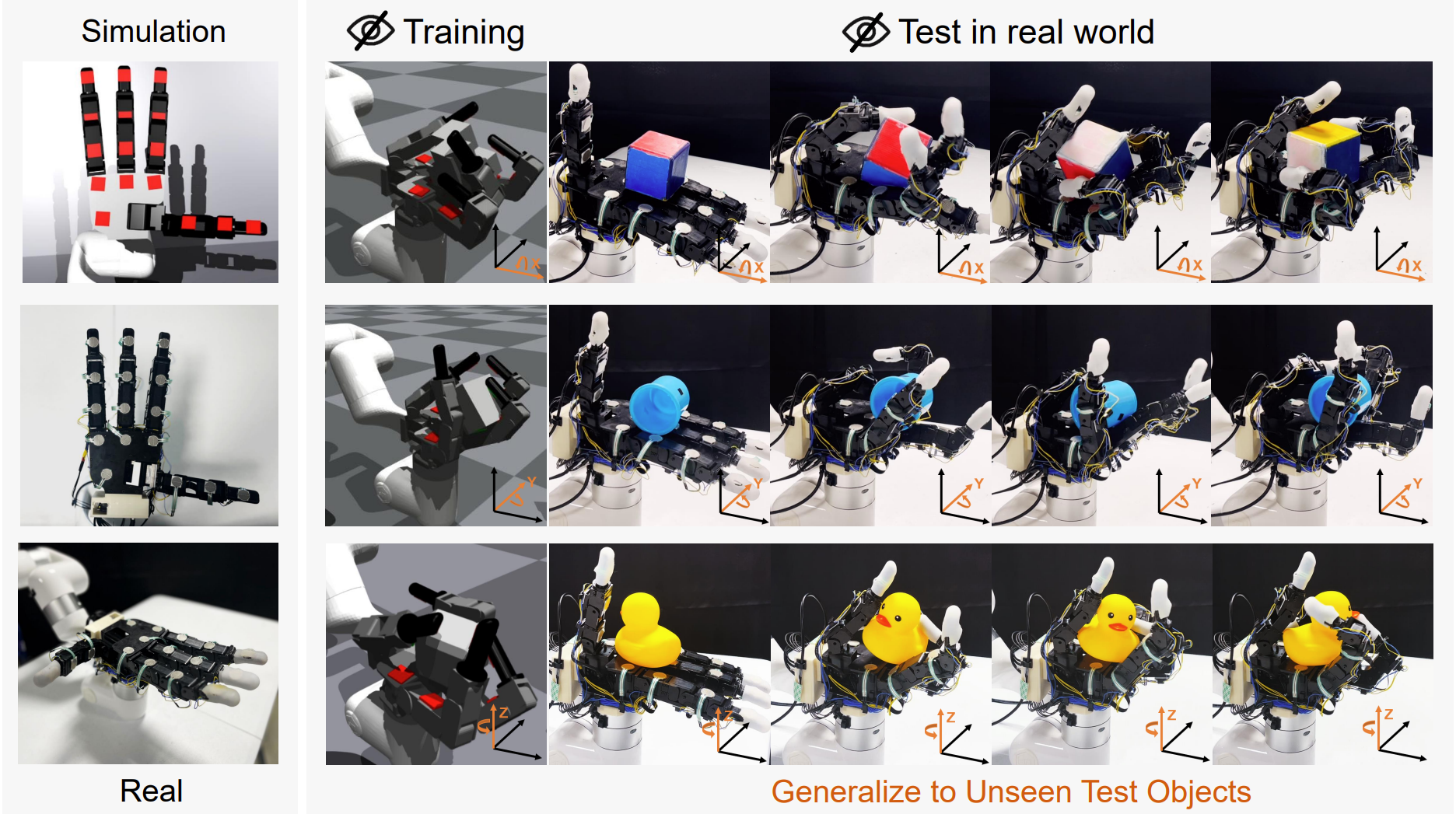

Tactile information plays a critical role in human dexterity. It reveals useful contact information that may not be inferred directly from vision. In fact, humans can even perform in-hand dexterous manipulation without using vision. Can we enable the same ability for a multi-finger robot hand? In this paper, we present Touch Dexterity, a new system that can perform in-hand object rotation using only touch, without seeing the object. Instead of relying on precise tactile sensing in a small region, we introduce a new system design using dense binary force sensors (touch or no touch) overlaying one side of the whole robot hand (palm, finger links, fingertips). Such a design is low-cost, gives larger coverage of the object, and minimizes the Sim2Real gap at the same time. We train an in-hand rotation policy using Reinforcement Learning on diverse objects in simulation. Relying on touch-only sensing, we can directly deploy the policy on a real robot hand and rotate novel objects that are not present in training. Extensive ablations are performed on how tactile information helps in-hand manipulation.

Video

Touch-only Dexterous Manipulation System

We present a new dexterous manipulation system design and learning pipeline for in-hand rotation using only touch. Our system is able to rotate various real-world objects around different axes without seeing them. The hardware consists of an Allegro robotic hand with 16 low-cost force-sensing resistor (FSR) sensors. We train a control policy to rotate multiple objects in simulation through RL. The learned policy then transfers directly to the real robot hand and generalizes to unseen, novel objects.

Hardware

Our tactile perception system consists of 16 low-cost force-sensing resistor (FSR) sensors. The touch-sensing array provides feedback on critical hand-object contacts and helps the hand understand the object's shape, which supports successful complex in-hand object manipulation.
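As a rough illustration of the "touch or no touch" signal, the sketch below thresholds 16 raw FSR readings into binary contact flags. The normalized reading range, the threshold value, and the function name `binarize_touch` are assumptions made for this example; they are not taken from our hardware driver.

```python
from typing import List, Sequence

NUM_SENSORS = 16          # from the text: 16 FSR sensors on the hand
CONTACT_THRESHOLD = 0.5   # hypothetical threshold on a normalized [0, 1] reading


def binarize_touch(raw_readings: Sequence[float],
                   threshold: float = CONTACT_THRESHOLD) -> List[int]:
    """Map each raw FSR reading to 1 (touch) or 0 (no touch)."""
    if len(raw_readings) != NUM_SENSORS:
        raise ValueError(
            f"expected {NUM_SENSORS} readings, got {len(raw_readings)}"
        )
    # A reading strictly above the threshold counts as contact.
    return [1 if reading > threshold else 0 for reading in raw_readings]


if __name__ == "__main__":
    # Made-up readings: sensors 0 and 5 are pressed, the rest are idle.
    readings = [0.0] * NUM_SENSORS
    readings[0] = 0.9
    readings[5] = 0.7
    print(binarize_touch(readings))
```

Discarding the force magnitude in this way means the simulator only has to reproduce whether a contact occurs, not how hard it is, which is one plausible reading of why a binary signal helps narrow the Sim2Real gap.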

In-hand Object Rotation In The Dark

Since our system does not rely on vision, it can perform in-hand manipulation in the dark.

This is a significant advantage when applying dexterous manipulation systems in complex real-world scenarios, where reliable visual input is hard to obtain.

Rotating Different Objects

Our system is able to rotate a large variety of real-world objects around different axes. Some examples are shown below.

Enabling Human-Shared Control

Combining various learned object rotation primitives, our system naturally provides a convenient human-shared control interface.

In the following example, a human operator uses a keyboard to control our system to reorient an object.
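A minimal sketch of how keyboard input could select among rotation primitives is given below. The key bindings, the `(axis, direction)` representation of a primitive, and the function names are hypothetical and chosen only for illustration; the actual interface may differ.

```python
from typing import Dict, Iterable, List, Optional, Tuple

# A primitive is represented here as (rotation axis, direction sign).
Primitive = Tuple[str, int]

# Hypothetical key bindings: one key per axis and direction.
KEY_BINDINGS: Dict[str, Primitive] = {
    "a": ("x", +1), "d": ("x", -1),
    "w": ("y", +1), "s": ("y", -1),
    "q": ("z", +1), "e": ("z", -1),
}


def select_primitive(key: str,
                     current: Optional[Primitive] = None) -> Optional[Primitive]:
    """Return the primitive bound to `key`, or keep `current` if unbound."""
    return KEY_BINDINGS.get(key.lower(), current)


def run_session(keys: Iterable[str],
                start: Optional[Primitive] = None) -> List[Optional[Primitive]]:
    """Replay a sequence of key presses and record the active primitive."""
    active = start
    history: List[Optional[Primitive]] = []
    for key in keys:
        active = select_primitive(key, active)
        history.append(active)
    return history


if __name__ == "__main__":
    # The unbound key "p" leaves the active primitive unchanged.
    print(run_session(["a", "p", "Q"]))
```

In a real deployment, each selected primitive would dispatch to the corresponding learned rotation policy; that step is omitted here.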

Understanding Shapes through Touch

To understand how touch information could be helpful, we conduct a study from a shape understanding perspective.

We train a neural network to predict the object's shape from the rotation rollout.

Then, we use it to predict a novel object's shape from its rotation rollout.

We find that accurately reconstructing the object's shape is possible only if touch information is present in the rollout.
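To make the with-touch and without-touch comparison concrete, the sketch below flattens a rollout into a single input vector and optionally zeroes out the touch channel. It assumes each rollout step holds 16 joint positions and 16 binary touch flags; this page does not specify the rollout contents, and the function name `rollout_to_features` is hypothetical.

```python
from typing import List, Sequence, Tuple

NUM_JOINTS = 16    # assumption: one position per Allegro hand joint
NUM_SENSORS = 16   # from the text: 16 FSR sensors

# One rollout step: (joint positions, binary touch flags).
Step = Tuple[Sequence[float], Sequence[int]]


def rollout_to_features(rollout: Sequence[Step],
                        use_touch: bool = True) -> List[float]:
    """Flatten a rollout into one feature vector for a shape predictor.

    With use_touch=False the touch entries are replaced by zeros, so the
    vector keeps the same length but carries no contact information.
    """
    features: List[float] = []
    for joints, touch in rollout:
        if len(joints) != NUM_JOINTS or len(touch) != NUM_SENSORS:
            raise ValueError("unexpected step dimensions")
        features.extend(float(q) for q in joints)
        features.extend(float(t) if use_touch else 0.0 for t in touch)
    return features


if __name__ == "__main__":
    step = ([0.1] * NUM_JOINTS, [1] + [0] * (NUM_SENSORS - 1))
    with_touch = rollout_to_features([step, step], use_touch=True)
    without_touch = rollout_to_features([step, step], use_touch=False)
    print(len(with_touch), sum(with_touch[NUM_JOINTS:2 * NUM_JOINTS]))
    print(len(without_touch), sum(without_touch[NUM_JOINTS:2 * NUM_JOINTS]))
```

Keeping the vector length fixed lets the same network architecture be trained on both variants, so any difference in reconstruction quality can be attributed to the touch signal.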

Media Coverage

BibTeX

@article{touch-dexterity,
  title = {Rotating without Seeing: Towards In-hand Dexterity through Touch},
  author = {Yin, Zhao-Heng and Huang, Binghao and Qin, Yuzhe and Chen, Qifeng and Wang, Xiaolong},
  journal = {Robotics: Science and Systems},
  year = {2023},
}